Look, here’s the thing nobody wants to admit: 87% of producers are already using some form of AI in their workflow. Not because they’ve been brainwashed by tech startups (okay, partially that), but because AI tools actually work when used correctly. The problem isn’t AI itself. It’s the difference between using AI to enhance your creativity and using it to replace it. [1]

In 2026, the music industry is split between two paranoid camps: musicians convinced AI will steal their jobs, and startups convinced AI will replace all musicians. Both are missing the point. The real innovation happening isn’t in full-track generators like Suno or Udio (which are objectively lazy and creatively bankrupt). It’s in assistive tools that let you work faster without losing your voice.

The boundary between “tool” and “threat” is thinner than you think. So here are eight ways to stay on the right side of it.

1. Let AI Master Your Tracks (Then Ignore Its Suggestions If You Want)

LANDR changed the game in 2014 by making professional mastering accessible to bedroom producers without a $1,500 bill. Today, iZotope Ozone and other AI mastering tools do the same job with more control: they analyze your mix and suggest frequency balancing, dynamics control, and loudness optimization in seconds. [2]

The catch? You’re still steering the ship. iZotope’s “Custom Flow” lets you override everything—set your own loudness targets, choose which processing chains to apply, adjust by genre. The AI proposes; you dispose. This is the gold standard of ethical AI usage: the machine does the math, you make the art.
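To make “set your own loudness targets” concrete, here is a minimal sketch of the arithmetic behind it: measure the current level, subtract it from the target, and apply the difference as gain. It uses plain RMS level in dBFS as a rough stand-in for the LUFS measurement that mastering tools report (LUFS adds frequency weighting and gating on top). The function names and the -14 dB target are illustrative assumptions, not anything taken from iZotope or LANDR.

```python
import math

def rms_dbfs(samples):
    """RMS level of float samples (range -1.0..1.0) in dB relative to full scale."""
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # digital silence
    return 10 * math.log10(mean_square)

def gain_to_target(samples, target_db):
    """Gain in dB needed to move the measured level to target_db."""
    return target_db - rms_dbfs(samples)

def apply_gain(samples, gain_db):
    """Scale every sample by the linear equivalent of gain_db."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]

# Hypothetical input: one second of a quiet 440 Hz sine at 44.1 kHz.
sample_rate = 44100
tone = [0.1 * math.sin(2 * math.pi * 440 * n / sample_rate)
        for n in range(sample_rate)]

gain = gain_to_target(tone, target_db=-14.0)  # you choose the target
louder = apply_gain(tone, gain)
```

The target is the creative decision here; the measurement and the gain are just bookkeeping, which is the part worth handing to a machine.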

2. Steal Stems From Any Track (Without Stealing the Whole Song)

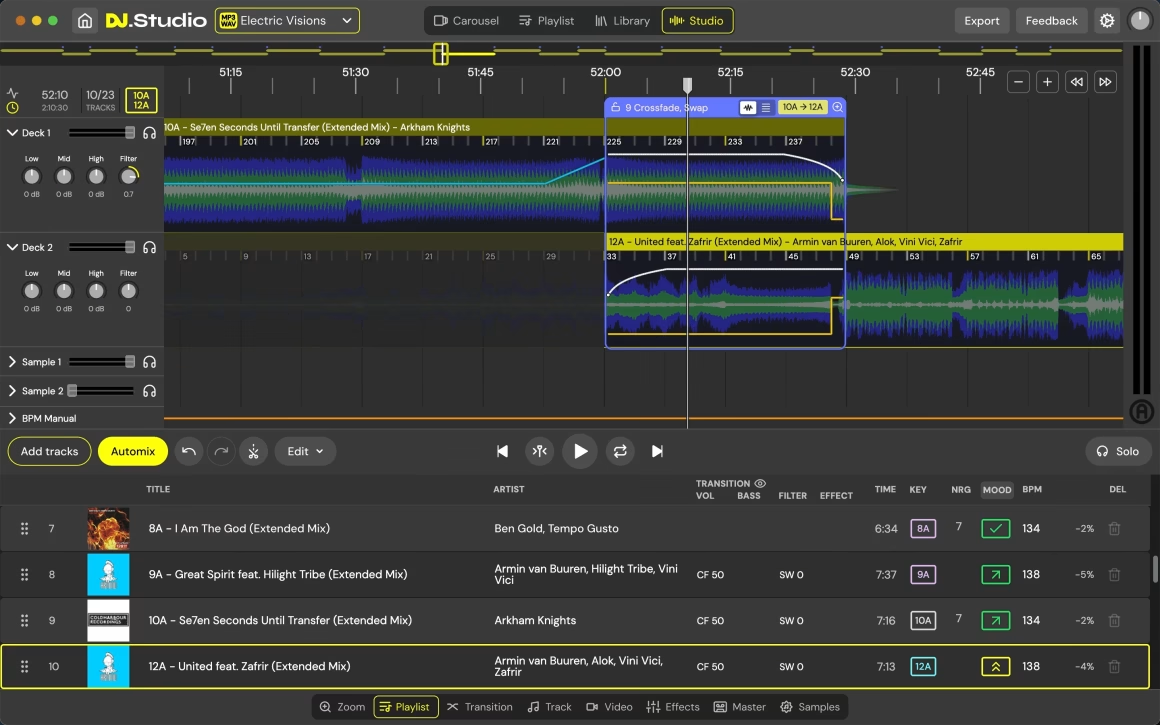

DJ.Studio and AudioShake made stem separation—breaking a finished track into vocals, drums, bass, and instruments—accessible to everyone. A decade ago, you needed the original session files to remix properly. Now you just upload an MP3.

Here’s why this matters: You can finally remix tracks the way sample culture always worked, by taking a piece and rebuilding it. Except now you’re not hoping a bootleg acapella sounds decent; you’re getting AI-separated stems with 94% accuracy. A DJ can layer a vocal over a completely different beat, or strip percussion for a live remix. That’s creative control your parents’ generation never had.

The caveat: If you release that remix, you need rights clearance. The AI is neutral; the law is not.

3. Stop Scrolling Through 10,000 Samples—Let AI Do It For You

Splice’s “Create” feature and iZotope’s recommendation engines solve the most soul-crushing part of production: finding compatible sounds. Upload your loop, and the AI analyzes its sonic signature (key, tempo, energy, instrumentation), then suggests samples that actually fit. [3]

This isn’t the AI composing for you. It’s the AI doing research so you can focus on taste. You still choose what goes in; the algorithm just curates what’s available. It’s like having a record store clerk who actually knows your sound and never sleeps.
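The “curate, don’t compose” idea can be sketched with nothing more than metadata. The example below is a deliberately simple filter that assumes each sample already carries a key and tempo tag. Real tools analyze the audio itself, and nothing here reflects how Splice actually ranks results. The `Sample` class, the 3% tempo tolerance, and the library entries are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    name: str
    key: str    # e.g. "A minor"
    bpm: float

def tempo_fits(loop_bpm, sample_bpm, tolerance=0.03):
    """True if the sample is within tolerance of the loop tempo,
    or of its half-time or double-time equivalent."""
    for ratio in (0.5, 1.0, 2.0):
        target = loop_bpm * ratio
        if abs(sample_bpm - target) / target <= tolerance:
            return True
    return False

def shortlist(loop, library):
    """Keep samples in the same key with a workable tempo,
    closest tempo first. The producer still picks from the list."""
    matches = [s for s in library
               if s.key == loop.key and tempo_fits(loop.bpm, s.bpm)]
    return sorted(matches, key=lambda s: abs(s.bpm - loop.bpm))

my_loop = Sample("my_loop", "A minor", 124)
library = [
    Sample("pad_01", "A minor", 124),
    Sample("hat_02", "A minor", 62),    # half-time
    Sample("bass_03", "C major", 124),  # wrong key for this simple filter
    Sample("vox_04", "A minor", 140),   # too far off tempo
    Sample("perc_05", "A minor", 126),
]

picks = [s.name for s in shortlist(my_loop, library)]
```

The output is a shorter list to audition, not a finished arrangement, which is the whole point of the section above.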

4. Use MIDI Assistants as Brainstorming Partners, Not Ghostwriters

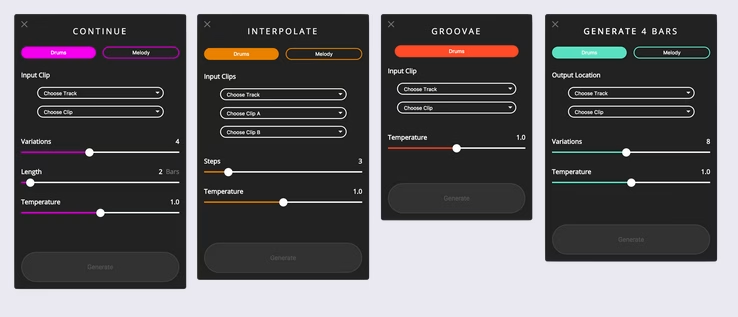

Magenta Studio and AIVA can generate MIDI patterns and melodies, and yes, they’re genuinely useful. But here’s the ethical part: treat them as inspiration, not automation. [4]

Magenta’s “Interpolate” feature takes two melodic ideas and generates variations. Use one. Edit three. Discard the rest. The final composition should sound like you, not like a statistical average of 10,000 songs in the training data. If you can’t tell where your creativity ends and the algorithm begins, you’re using it wrong.
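To get a feel for what “variations between two ideas” means, here is a much cruder stand-in: linear blending of two equal-length melodies, note by note, in MIDI pitch numbers. Magenta’s tool relies on a trained model rather than this kind of arithmetic, so treat the sketch as an illustration of the workflow (generate several, keep one, edit it) and not as a description of how Interpolate works. The function name and both melodies are made up for the example.

```python
def interpolate_melodies(a, b, steps):
    """Return `steps` in-between melodies from a to b.

    a and b are equal-length lists of MIDI note numbers (60 = middle C).
    Each variation moves every note a fixed fraction of the way from a to b.
    """
    if len(a) != len(b):
        raise ValueError("melodies must have the same number of notes")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    variations = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)  # fraction of the way from a to b
        variations.append([round(x + (y - x) * t) for x, y in zip(a, b)])
    return variations

melody_a = [60, 62, 64, 65]  # rising line from middle C
melody_b = [72, 70, 68, 65]  # falling line from the octave above
variations = interpolate_melodies(melody_a, melody_b, steps=3)

# Pick one candidate and edit it by hand; that edit is where your voice comes in.
keeper = list(variations[1])
keeper[0] = 67
```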

5. Smart Mixing Tools Are Your Silent Assistants (If You Let Them Be)

iZotope Neoverb, Plasma, and Aurora use machine learning to analyze your vocals or instruments and suggest processing chains. Neoverb’s Reverb Assistant, for example, listens to your vocal and recommends reverb types. You approve, modify, or reject every suggestion. [5]

This is assistive audio technology at its best: AI handles the boring math (frequency analysis, dynamic detection), you handle the creative decisions (vibe, space, character). You’re still the mixer; the tool is your intern.

6. Let AI Sequence Your DJ Sets While You Keep the Soul

DJ.Studio’s “Harmonize” feature suggests track order based on harmonic compatibility and energy flow. Harmonic mixing is a technical skill that takes years to develop by ear. AI democratizes it. [6]

But here’s the thing: you still curate the set. The algorithm suggests an order; you review it, reorder tracks, hand-edit transitions. The final set is your creative statement, not the algorithm’s. There’s a massive difference between that and a pre-made DJ mix generated entirely by software—which is pure automation and worth avoiding.
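For readers curious what “harmonic compatibility” looks like as a rule, here is a minimal sketch using the Camelot wheel convention DJs commonly use: two keys mix cleanly if they share a code, sit one step apart on the wheel with the same letter, or share a number with different letters. The greedy ordering and the use of BPM as a stand-in for energy are assumptions made for illustration; this is not DJ.Studio’s algorithm, and the track list is invented.

```python
def parse_camelot(code):
    """Split a Camelot code such as '8A' into (8, 'A')."""
    return int(code[:-1]), code[-1].upper()

def compatible(a, b):
    """True if two Camelot keys are the same, adjacent on the wheel
    (same letter, wrapping 12 -> 1), or relative major/minor (same number)."""
    num_a, let_a = parse_camelot(a)
    num_b, let_b = parse_camelot(b)
    if let_a == let_b:
        return abs(num_a - num_b) in (0, 1, 11)
    return num_a == num_b

def suggest_order(tracks):
    """Greedy suggestion: start with the first track, then repeatedly pick
    the compatible track with the closest BPM. If nothing is compatible,
    fall back to the closest BPM overall."""
    remaining = list(tracks)
    order = [remaining.pop(0)]
    while remaining:
        current = order[-1]
        candidates = [t for t in remaining
                      if compatible(current[1], t[1])] or remaining
        nxt = min(candidates, key=lambda t: abs(t[2] - current[2]))
        remaining.remove(nxt)
        order.append(nxt)
    return order

# (title, Camelot key, BPM)
crate = [
    ("Opener", "8A", 122),
    ("Peak", "10B", 128),
    ("Lift", "9B", 124),
    ("Builder", "9A", 125),
]

suggested = [title for title, _, _ in suggest_order(crate)]
```

The result is only a suggestion. Reordering it by hand, or overriding a “compatible” transition that feels wrong, is exactly the curation described above.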

7. Enhance Vocals. Never Clone Them.

AI vocal processing (de-essing, pitch correction, restoring dynamics) is fair game. iZotope’s Unlimiter restores crushed transients to overly compressed vocals. It’s corrective, not generative.

But voice cloning? That’s the line. Using AI to recreate another artist’s voice without permission is a deepfake, full stop. It violates consent, copyright, and basic respect. The industry rightly called out Meta and OpenAI for training models on artist voices without permission. Don’t be that person. [7]

8. Sound Design Exploration (Not Sound Design Replacement)

DDSP-VST and other neural synthesis tools let you morph between sounds, explore timbral possibilities, and discover sonic spaces you’d never find manually. Use them to inspire new sounds, not to get out of designing sounds yourself. [8]

The Line in the Sand

Here’s what kills the industry: full-track generation (Suno, Udio), pre-made DJ mixes, and voice cloning. They automate away the parts of music that require taste, judgment, and years of listening. They’re also technically indefensible as “creative tools.”

Everything else? Fair game. Use AI to work faster, smarter, and with fewer distractions. Just make sure the final track sounds like you, not like a statistical composite of everyone else.

That’s not just ethical. It’s also how you stay relevant in a field where taste is the only thing machines can’t fake—yet.

1. https://aristake.com/ai-tools-musicians-study/
2. https://skywork.ai/blog/ai-agent/landr-review/
3. https://imusician.pro/en/resources/blog/splice-new-ai-feature-allows-artists-to-match-their-own-loops-with-suitable-samples
4. https://slimegreenbeats.com/blogs/music/essential-ai-tools-for-music-producers-in-2025
5. https://music.ai/news/music-tech/izotope-launches-ozone-12-ai-mastering-suite/
6. https://dj.studio/blog/ai-mixing-apps
7. https://nhsjs.com/2025/the-impact-of-artificial-intelligence-on-music-production-creative-potential-ethical-dilemmas-and-the-future-of-the-industry/
8. https://thelabelmachine.com/blog/music-ai-tools-list/
